Sharded Counters: How Facebook Scales Like Buttons to Billions

Hello friend 👋

Ever wonder how Facebook manages to track billions of Likes every day — without their database just straight-up quitting its job and moving to a beach in Bali?

Because let’s be honest: if you or I tried to count a billion “Likes” in real time using a standard SQL table, we’d be DDoS’ing ourselves into oblivion. The server would overheat, the disk would start crying, and someone in ops would probably get paged at 3 a.m. with a “Counter table locked again 😭” alert.

But Facebook? Smooth as butter. Not a single crash (at least, not because of Likes).

So what’s their secret sauce?

Grab a cup of coffee, because we’re about to go deep into the world of sharded counters and eventual consistency, with some real-world examples you can actually use — whether you’re building a startup or just want your app to stop melting.

🤯 The Problem With Counting Things

Let’s start with something simple.

Imagine you’re building a small social app. Your schema looks like this:sql CREATE TABLE posts ( id SERIAL PRIMARY KEY, content TEXT, like_count INTEGER DEFAULT 0 );

Now, every time someone taps “Like”, you do this

UPDATE posts SET like_count = like_count + 1 WHERE id = 42;Seems fine, right? But here’s the thing — this works perfectly until you’re popular. And when I say “popular”, I mean Facebook levels of popular. Like, “Oprah just shared your post” popular.

Suddenly, thousands of users are liking the same post at the same time, and your humble little database table is getting hammered like a piñata at a toddler’s birthday party.

And what happens next?

- Lock contention: All those updates are trying to grab a lock on the same row.

- Performance nosedive: Your latency spikes like Bitcoin in 2021.

- Possible downtime: Congrats, your “Like” button just became a denial-of-service vector.

So… how does Facebook not implode?

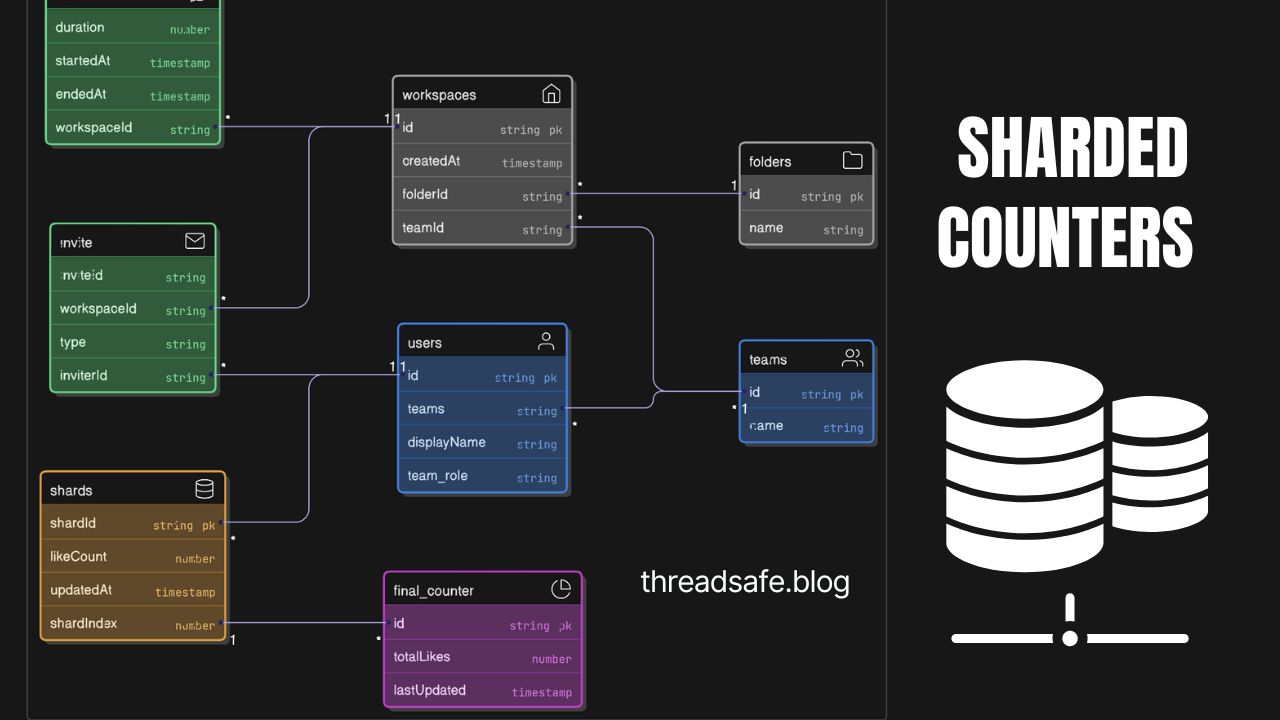

Enter Sharded Counters (a.k.a. Divide and Count Conquer)

The idea is genius-level simple: don’t keep one counter — keep many.

Instead of updating one like_count field, Facebook breaks the counter into multiple shards (small pieces) that can be updated independently, and then aggregated when needed.

How it works:

- You create a bunch of “shards” for the counter, like 100 small buckets.

- Each time someone likes a post, you randomly pick a shard and increment that.

- To display the total likes, you sum all the shards.

A Schema Example

CREATE TABLE post_likes_shards (

post_id BIGINT,

shard_id INT,

like_count INT DEFAULT 0,

PRIMARY KEY (post_id, shard_id)

);When a user likes a post

UPDATE post_likes_shards

SET like_count = like_count + 1

WHERE post_id = 42 AND shard_id = 7;To get the total

SELECT SUM(like_count)

FROM post_likes_shards

WHERE post_id = 42;Boom. Now your writes are spread out, your database is happy, and Oprah can like as many posts as she wants.

Eventual Consistency: A Trade-Off for Performance

Understanding Eventual Consistency

The key to Facebook’s scalability lies in eventual consistency. This concept allows for a slight delay in accuracy, but ensures that the system can handle massive scale.

Eventual consistency means that the system doesn’t guarantee real-time accuracy, but over time, all the shards will converge to the correct total count.

In practice:

- Facebook doesn’t worry if the like count is a few likes off for a few seconds.

- What matters is that the app remains fast and scalable.

This trade-off between availability and strict consistency is the secret sauce behind large-scale systems like Facebook.

Real-World Example You Can Use Today

Let’s say you run an online course platform (we’ll call it BrainBoost 🧠⚡).

You want to show how many times a lesson has been viewed. Easy, right?

Well, until you get featured on Hacker News.

Instead of this naive schema

CREATE TABLE lessons (

id SERIAL PRIMARY KEY,

title TEXT,

view_count INT

);Do this:

CREATE TABLE lesson_views_shards (

lesson_id BIGINT,

shard_id INT,

view_count INT DEFAULT 0,

PRIMARY KEY (lesson_id, shard_id)

);- Create 50 shards per lesson.

- Randomly update one shard on each view.

- Use caching (e.g., Redis) to store the total count and refresh every N seconds.

Result: Your service can scale like a champ, your DB won’t cry, and your users get real-time-ish stats that are good enough to impress their manager.

Tips for Building Your Own Sharded Counters

Here are a few tips from the trenches:

1. Choose the Right Number of Shards

- 10 is fine for small traffic.

- 100 or 1,000 for higher scale.

- Make it configurable so you can tweak it later.

2. Randomize the Shard on Write

Use something like

import random

shard_id = random.randint(0, 99)This spreads the load evenly.

3. Cache the Aggregated Count

Don’t SUM() shards every time someone loads the page. That’s just rude to your DB.

Instead:

- Use Redis or a fast in-memory cache.

- Refresh the count every few seconds or on schedule.

💡 Related: If you’re into scaling systems and real-time data flows, check out Redis Isn’t Just a Cache. It dives into how Redis powers real-time counters, rate limiting, and more — perfect if you’re building anything snappy or user-facing.

4. Use Background Jobs for Aggregation

Set up a cron job or worker to periodically recompute and store the total like/view counts.

Bonus: When Not to Use Sharded Counters

Not every counter needs to be sharded.

Don’t shard if:

- You have low traffic or a small user base.

- The counter isn’t updated frequently.

- You need exact accuracy at all times (e.g., inventory for e-commerce).

Sharded counters are best when:

- You’re optimizing for massive concurrency.

- The number can be eventually correct.

- You want blazing fast writes with no bottlenecks.

So, Can I Do This Without Facebook-Sized Infra?

Yes, 100%.

Sharded counters are like sourdough starter: everyone thinks it’s complicated until you realize it’s just flour, water, and time.

Whether you’re using:

- PostgreSQL

- MySQL

- MongoDB

- DynamoDB

- Redis

…you can implement a version of this strategy. In fact, Firebase and DynamoDB both have docs on this exact pattern. If it works for them, it’ll work for you.

FAQ: All You Need to Know About Sharded Counters

What is a sharded counter?

A sharded counter splits a counter into multiple smaller counters or shards, which are updated independently. When a user performs an action (like clicking “Like”), the system randomly increments one of the shards. The total count is obtained by summing the values of all the shards.

Why Not Use a Single Counter?

Because databases don’t like it when thousands (or millions) of people try to update the same row at the same time.

A single counter can’t scale when multiple users update it at the same time. It leads to lock contention, high latency, and potential crashes. Sharded counters distribute the load, making writes faster and more scalable.

What is eventual consistency?

Eventual consistency means the system doesn’t guarantee that all reads will return the most recent write immediately, but over time, all nodes will converge to the same value.

In our case: if you count the number of Likes a second after someone clicks “Like”, it might still show 999 instead of 1000. But wait a few seconds, and it’ll catch up.

It’s like your brain processing bad news — it takes a second, but eventually, you get there. 🧠

🧠 Is Facebook really using this in production?

Yes! Facebook, Google, Twitter, and many other large-scale platforms have published engineering blogs discussing how they use sharded or distributed counters and eventual consistency to handle massive scale.

When billions of users are clicking things every day, there’s simply no way to scale using a single-row counter.

🗣 Final Thoughts:

So next time you hit “Like” on a post, take a moment to appreciate the engineering magic happening under the hood. Billions of clicks. Billions of updates. No explosions.

And now you know how to pull off the same trick — even if you’re not Facebook.

So go forth and shard those counters, cache those sums, and make your database feel like it’s on vacation (instead of the verge of burnout).

📬 Over to You!

Have you implemented sharded counters or eventual consistency in your app? Or perhaps your DB had a breakdown under heavy load? I’d love to hear about it. Share your experiences with me on Twitter.

Stay threadsafe,

— Your friendly neighborhood backend whisperer 🧙♂️